Non-von Neumann Architectures

After three-quarters of a century of impressive progress in sequential processing, limitations are emerging in the approach defined by John von Neumann in 1945. Although new technologies have advanced the basic model and embraced massively parallel acceleration, managing resources is becoming much more difficult as core counts reach into the millions. Many past efforts have explored this space (reduction machines, dataflow architectures, stream processing, message passing, functional programming approaches); our primary focus is on exploring emerging neuromorphic architectures that apply a neural-network philosophy.

Cognitive Computing

Designers began mimicking biological approaches in order to understand the energy efficiency and parallelism found in nature. The neuromorphic concept was developed by Carver Mead at Caltech in the late 1980s to describe the use of VLSI systems containing electronic circuits that imitate neuro-biological architectures present in the central nervous system. More recently the term has evolved to cover analog, digital, and mixed-mode analog/digital VLSI and software systems that implement models of neural systems for perception, motor control, multisensory integration, and, of most interest in this context, cognitive computation.

Neural Networks

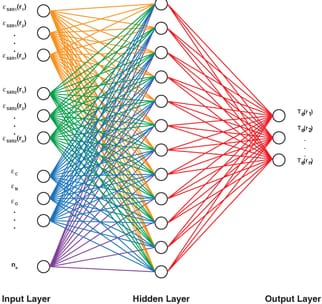

Artificial neural nets are being developed for deep learning and other machine learning tasks such as image recognition and voice processing. Two broad methods are used: programs that simulate the action of biological elements, and hardware that physically emulates the activity of natural neural cells.

The former method has achieved breakthrough successes in classification tasks and explored the mathematical landscape by exploiting the power of current-generation GPUs. Techniques such as back-propagation, recurrence, deep layering, and convolution all demand extraordinary computational capability, but they are yielding ever-increasing performance on pattern-matching tasks.

The second method, hardware emulation, can be subdivided into two classes: analog representations that mimic the biological manipulation of charge within cells, and digital circuits that encode values and functionally generate the appropriate summations and integrations. The latter is especially interesting because it leverages the rapid advances in semiconductor development (primarily CMOS). This hardware is both faster and lower power than simulation on von Neumann machines. For emulation, however, the challenge lies in "programming" the devices and matching the available technology to the problem at hand, which is still done largely by hand.
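To make the "summations and integrations" of the digital class concrete, the following is a minimal sketch of an integer leaky integrate-and-fire neuron. It is an illustration only, not a description of any particular chip: the class name `DigitalNeuron`, the weight values, the threshold, and the leak are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DigitalNeuron:
    """Hypothetical integer integrate-and-fire neuron (illustrative only)."""
    weights: List[int]   # signed integer synaptic weights (assumed values)
    threshold: int       # potential at which the neuron fires
    leak: int = 1        # amount drained from the potential every tick
    potential: int = 0   # accumulated state, analogous to membrane charge

    def step(self, spikes: List[int]) -> int:
        # Summation: add the weight of every input that spiked this tick.
        self.potential += sum(w for w, s in zip(self.weights, spikes) if s)
        # Integration with leak: drain a fixed amount, clamped at zero.
        self.potential = max(self.potential - self.leak, 0)
        # Fire and reset once the threshold is reached.
        if self.potential >= self.threshold:
            self.potential = 0
            return 1
        return 0


def run(neuron: DigitalNeuron, spike_train: List[List[int]]) -> List[int]:
    """Feed one input vector per tick and collect the output spikes."""
    return [neuron.step(spikes) for spikes in spike_train]


if __name__ == "__main__":
    n = DigitalNeuron(weights=[3, 2], threshold=5)
    print(run(n, [[1, 0], [1, 1], [0, 0], [0, 1]]))
```

Everything here is integer addition, subtraction, and comparison, which is the kind of arithmetic that maps naturally onto small CMOS logic blocks; choosing the weights and threshold for a given task is the "programming" problem described above.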

Brain-Inspired Architecture

It is our assertion that future computing architectures must embrace elements of biologically motivated computing, yet retain the legacy of many decades of von Neumann evolution. Our approach is to begin by equipping conventional platforms with ANN accelerators reachable via standard OS protocols and mechanisms: a hybrid platform. Most new information (Big Data) now arrives in unstructured forms such as video, images, symbols, and natural language, and this approach is an attempt to apply Artificial Intelligence techniques to process, discover, and infer patterns present in the data with minimal a priori knowledge. Machine Learning and automated data classification are now heavily used in social media, and our focus is to aid in applying those mechanisms for discovery.

Several promising hardware efforts are emerging, such as the TrueNorth chip being developed by our colleagues at IBM Research (we are well acquainted with it, having been privy under NDA to development on the NS1e).

We have a long-standing relationship with General Vision to deploy NeuroMem® neuron structures designed around selected tasks of interest. By embracing neuromorphic principles we can gain insight into the morphology of individual neurons, circuits, and overall architectures, and how this in turn creates desirable computations, affects how information is represented, influences robustness to damage, incorporates learning and development, exhibits plasticity (adaptation to local change), and facilitates evolutionary modifications.

Additionally, we are extending the Caffe deep learning framework, developed by the Berkeley Vision and Learning Center, to target available hardware with digitally emulated neurons.

®Registered technology of Norlitech, LLC

Our Approach

The key factor in our technology is the ability to implement artificial intelligence in silicon and, moreover, to perform dynamic online learning at low power. Next silicon is due 2H22 for testing.

In order to address emerging semiconductor technology limitations, future computing hardware will likely share certain characteristics. First, because leakage grows as feature sizes diminish, processors will deploy massive parallelism, but at the process level rather than through simple core replication. Second, the energy lost to data transfer implies minimizing movement by computing within or very near the data; this can be accomplished with specialized circuitry for specific tasks. Third, massive resources require different programming techniques, at best none at all in a self-training regime. Fourth, dedicated hardware is anathema to the programming community and, in order to succeed, must offer some form of visibility to the algorithm developer. Finally, a hybrid transition will accelerate adoption. Our view is to attach programmable hardware neurons to conventional von Neumann processors as cognitive computing accelerators reachable via conventional OSes.

We are actively designing toward the above goals (more on Portfolio pages):

- IP is being integrated onto conventional FPGA platforms for OS integration and benchmarking (tightly coupled with both ARM and RISC-V processor cores and the associated full-stack software). We are additionally exploring integration within SSD platforms.

- Partners are collaborating on next-generation silicon using licensed IP, with tape-out in August 2022 in coordination with the TSMC foundry (55 nm).

- Active design is underway on rev 2 of the PCIe accelerator card for data analytics, proceeding in parallel with an NLP analysis task.

- We are in the design specification stage, in partnership with a memristor startup, for a test article (1H19 tape-out) that embeds hardware neurons into active memory arrays (suspended due to COVID, but may restart).

- In the HPC space, we are developing an ASIC designed for maximum throughput under the Efabless model.